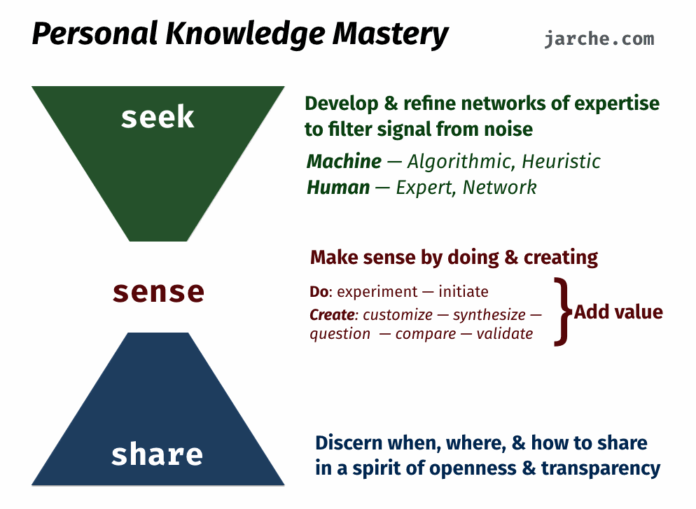

This image is one I have often used in explaining sensemaking with the PKM framework. It describes how we can use different types of filters to seek information and knowledge, then apply this by doing and creating, and then share, with added value, what we have learned. One emerging challenge today is that our algorithmic knowledge filters are becoming dominated by the output of generative pre-trained transformers based on large language models. More and more, these are generating AI slop, which means that machine filters, like our search engines, are no longer trusted sources of information.

As a result, we have to build better human filters: experts and subject matter networks.

As search engines and productivity tools keep regurgitating the same slop, or a variation of it, we move toward “an orthodoxy that ruthlessly narrows public thought” (John Robb). Generative AI and its hidden algorithms are hacking away at three things that human organizations need in order to learn, innovate, and adapt: diversity > learning > trust.

We need to ditch these sloppy tools and focus on connecting and communicating with our fellow humans. Keep on producing human-generated writing, like blogs, and use social media that isn’t algorithmically generated, like Mastodon. We have just completed a PKM workshop with a global cohort, and the consensus from participants is that skills such as media literacy, critical thinking, and curiosity are still essential for making sense of our technologically connected world.